So... we’ve made progress!

And, as promised, we’re keeping you updated.

But what should I tell you? As CTO, I tend to give too many details, because to me every one of them is a breakthrough and I am excited about each and every one. So I have decided to give them all to you. First of all, it’s exciting new tech and, as such, an interesting read. Secondly, I wouldn’t be doing my team justice if I left something out, since they are all great achievements. And lastly, I think the readers of this blog are perfectly capable of deciding for themselves what is important to them. Who am I to decide what you find important? I won’t give you all the dirty details, of course, because I wish every competitor the same joy of discovering and getting new tech working.

In our last blog post, we talked about three technologies that will give birth to game-changing applications for everyone: artificial intelligence, simulation engines and game engines. Following those same three lines, I will show you our latest developments.

This platform, what is it again?

Before I go into detail about our progress, here is a quick reminder of what we’re trying to achieve with this platform.

This platform provides every user with the ability to use these new technologies as they see fit.

For example, AI will not just be a list of recommendations. Instead, it will be a kind of personal assistant that does your bidding. Furthermore, the platform gives you an environment where you can build your own home, office or any other workspace or club space. You can do this simply by giving Styx, our AI assistant, the address and perhaps a few photos of the interior taken with your phone. Then you can customise it as you see fit: change the interior design, optimise the building or create an entirely different floor plan. And this is just for starters; the number of use cases is truly countless. I often say that the use cases are limited only by your own creativity and imagination. The platform is free to play and will be available on every device: smartphone, tablet, desktop, TV or VR headset.

Okay, enough of this. Without further ado: what have we achieved, and how far along are we?

Artificial Intelligence… the birth of Styx

The first thing we needed to do was give Styx a voice. We can use her by typing a request or by speaking to her, but how do we want her to sound? Although that might seem trivial to you, for us it was kind of a big deal, because her voice affects how we see her. So the question then is: who is Styx? The name Styx refers to a goddess from ancient Greek mythology. She gave birth to Nike (Victory) and Kratos (Strength), among others. No small feat, but our goddess is kind, willing to serve and curious. So how do we translate this into her voice? Yes, how indeed? The only thing we could do was listen to many example voices out there and then create our own version. After that, Styx needed to learn to listen to us. For this, she had to be able to classify text samples using our own NLP transformer models, based on BERT and several others, with intent recognition, entity recognition and so on. We got that to work!
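
For the more technical readers: intent recognition decides what a request is asking for, and entity recognition pulls out the details (a day, a time) needed to act on it. The snippet below only illustrates that input/output shape. It is a deliberately simplified stand-in, not our BERT-based models: it swaps in keyword scoring and regular expressions, and the intent names, keywords and patterns are all hypothetical.

```python
import re
from dataclasses import dataclass, field

# Hypothetical intents and trigger words. A real system learns this mapping
# from labelled examples with a transformer model such as BERT.
INTENT_KEYWORDS = {
    "schedule_meeting": {"appointment", "meeting", "schedule", "plan"},
    "build_space": {"build", "house", "home", "office"},
    "tour": {"show", "tour", "guide"},
}

# Hypothetical pattern-based entities: weekdays and 24-hour clock times.
ENTITY_PATTERNS = {
    "weekday": re.compile(r"\b(monday|tuesday|wednesday|thursday|friday|saturday|sunday)\b"),
    "time": re.compile(r"\b([01]?\d|2[0-3]):([0-5]\d)\b"),
}


@dataclass
class ParsedRequest:
    intent: str
    entities: dict = field(default_factory=dict)


def parse(text: str) -> ParsedRequest:
    lowered = text.lower()
    tokens = set(re.findall(r"[a-z]+", lowered))
    # Score each intent by how many of its keywords occur in the request.
    scores = {name: len(tokens & words) for name, words in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    intent = best if scores[best] > 0 else "unknown"
    # Keep the first match for each entity type.
    entities = {}
    for label, pattern in ENTITY_PATTERNS.items():
        match = pattern.search(lowered)
        if match:
            entities[label] = match.group(0)
    return ParsedRequest(intent, entities)
```

For example, "Please schedule a meeting on Friday at 14:30" comes out as the intent `schedule_meeting` with the entities `friday` and `14:30`, which is exactly the structured form a scheduling task needs as input.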

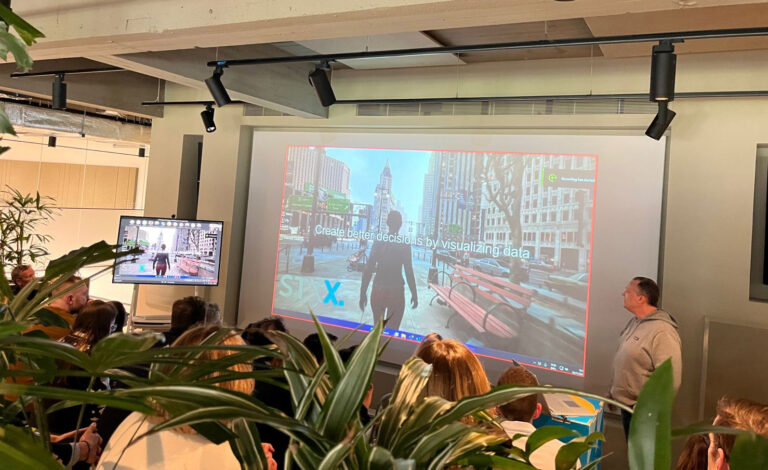

The next step was to create a pipeline from our models to Epic’s Unreal game engine. A goddess should not only speak with a specific voice; her looks and movements should also reflect her personality, so that talking to her feels more human. This pipeline is now in place too, and we have successfully set up a streamed data flow. Right now she is learning all kinds of tasks, such as making appointments with people inside our companies, Styx and K & R, but also outside them. Because, well, getting an appointment scheduled across lots of agendas is one of the most time-consuming tasks there is. So expect a call from her soon if you work with us :). She will also be the tour guide when we show the prototype platform to investors for the next step in our development towards launching the platform. We have added some training files so you can hear how she progressed from baby babbling to a voice we are thrilled with (make sure your sound is turned on to listen to them). Obviously, it can be better, but that just takes time. We keep training her, so her speech will soon become even more fluent.
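
Scheduling across many agendas boils down to a classic interval problem: pool everyone’s busy blocks, sort them, and walk through them looking for the first gap that is long enough. Below is a minimal Python sketch of that idea, assuming each agenda is a list of (start, end) busy blocks in minutes since midnight and a hypothetical 09:00 to 17:00 working day. It is an illustration of the technique, not the scheduler Styx actually uses.

```python
from typing import Iterable, Optional

Interval = tuple[int, int]  # (start, end) in minutes since midnight


def earliest_common_slot(
    busy_per_person: Iterable[list[Interval]],
    duration: int,
    day_start: int = 9 * 60,   # assumed working day: 09:00
    day_end: int = 17 * 60,    # assumed working day: 17:00
) -> Optional[Interval]:
    """Return the first slot of `duration` minutes that is free for everyone, or None."""
    # Pool everybody's busy blocks: a moment is only free if nobody is busy.
    busy = sorted(block for person in busy_per_person for block in person)
    cursor = day_start  # earliest moment not yet known to be busy
    for start, end in busy:
        # Is the gap before this busy block long enough, and still inside the day?
        if start - cursor >= duration and cursor + duration <= day_end:
            return (cursor, cursor + duration)
        cursor = max(cursor, end)
    # Check the gap after the last busy block.
    if day_end - cursor >= duration:
        return (cursor, cursor + duration)
    return None
```

With Alice busy 09:00 to 10:00 and 13:00 to 14:00, and Bob busy 09:30 to 11:00, the first common one-hour slot is 11:00 to 12:00, i.e. `(660, 720)`. Sorting once keeps the search at O(n log n) in the total number of busy blocks, however many agendas are involved.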

The next thing we are working on has to do with data analysis. We call it a real-estate compiler. This will be a separate AI model that analyses unlabelled public data, such as point-cloud data, to extract the information we need to build the model. The goal is that a user on the platform only has to give Styx the address of the building they want to replicate in their private user space.
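
To make the point-cloud idea a little more concrete: in a scan of a building, floors and ceilings show up as dense horizontal bands of points at the same height. The sketch below detects such bands with a simple height histogram. It is a hand-written heuristic, not the AI model described above, and it assumes the cloud is already levelled with z pointing up and measured in metres; the bin size and threshold are arbitrary example values.

```python
from collections import Counter


def detect_levels(points, bin_size=0.1, min_share=0.15):
    """Return heights (m) of horizontal bands holding at least `min_share` of all points.

    `points` is an iterable of (x, y, z) tuples; only z is used here.
    """
    points = list(points)
    # Bucket each point by height; floors and ceilings form unusually dense buckets.
    bins = Counter(round(z / bin_size) for _x, _y, z in points)
    total = len(points)
    levels = [b * bin_size for b, count in bins.items() if count / total >= min_share]
    return sorted(round(level, 2) for level in levels)
```

For a room scanned as a grid of floor points at 0 m, ceiling points at 2.7 m and scattered wall points in between, this returns `[0.0, 2.7]`, from which a storey height of 2.7 m follows. A learned model is needed precisely where this simple rule breaks down: sloped roofs, furniture, stairs and noisy scans.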

Simulation with real-world physics

Using a physics engine to simulate real-world events is not new; we have been doing that for more than 20 years. Doing it in real time, however, is something different. To optimise a building, we obviously need to calculate how much energy is used to give users a comfortable experience in their facility. To give the word “optimisation” any meaning, we also need to calculate the relative outcome of any change you make to the design. Furthermore, we need to simulate the behaviour of the building’s occupants over a certain time frame (let’s say a day or a season) to make the prediction more meaningful.

And while we’re at it, we would also like to calculate more variables than “just” energy consumption: artificial lighting, airflow, CO2 production, human comfort, life cycle costs and so on. So if we change something in the building, e.g. the size of the windows or the number of solar panels, we need to recalculate all of these in real time, with acceptable responsiveness, so you don’t have to wait forever for the results.
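
As a small example of what a “relative outcome” means for the window case: the steady-state transmission heat loss through a facade is Q = ΣU·A·ΔT, so trading insulated wall for glazing raises the loss. The Python sketch below uses assumed U-values (0.3 W/(m²·K) for the wall, 1.1 W/(m²·K) for double glazing) and ignores solar gains, ventilation and thermal mass, all of which a real building simulation has to include.

```python
from dataclasses import dataclass


@dataclass
class Facade:
    total_area: float      # m², wall plus windows
    window_area: float     # m²
    u_wall: float = 0.3    # W/(m²·K), assumed value for an insulated wall
    u_window: float = 1.1  # W/(m²·K), assumed value for double glazing

    def heat_loss_coefficient(self) -> float:
        """Transmission heat loss coefficient H = sum of U·A, in W/K."""
        wall_area = self.total_area - self.window_area
        return self.u_wall * wall_area + self.u_window * self.window_area


def heat_loss_watts(facade: Facade, t_inside: float, t_outside: float) -> float:
    """Steady-state transmission loss Q = H·ΔT in watts (temperatures in °C)."""
    return facade.heat_loss_coefficient() * (t_inside - t_outside)


# Compare two window sizes on the same 100 m² facade at 20 °C inside, 0 °C outside.
small = Facade(total_area=100.0, window_area=20.0)
large = Facade(total_area=100.0, window_area=30.0)
```

Under these assumptions, going from 20 m² to 30 m² of window raises the loss from 920 W to 1080 W. A larger window also lets in more sunlight, so the net effect on energy use can go either way; that is exactly why every variable has to be recalculated together after each change.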

A daunting task, you might think, and you would be right: it is indeed a challenge. But we are nearly there. We managed to create a bi-directional pipeline between our game engine and our physics engine. So we can now change anything in the building on the platform and have the model in our physics engine updated. The physics engine then calculates and simulates the relevant variables and sends the results back. Furthermore, we have covered almost all of the design changes we would want to simulate concerning MEP (mechanical, electrical and plumbing installations) and alterations to the building itself. What we need to do now is automate all the change and calculation processes. On top of that, we need to QA all the algorithms we use to make sure we get reliable outcomes. And of course, we need to animate and visualise all the results so everybody can understand them easily. We are also working on artificial lighting and airflow. So we still have a way to go, but nonetheless this was a big step in our development.
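
The round trip described above (the game engine sends a design change, the physics engine updates its model, recalculates and sends the results back) can be sketched with two message queues. This is a minimal in-process Python illustration, not our actual pipeline: the message classes, the worker function and the placeholder calculation are all hypothetical, and in practice the two sides run as separate programs that talk over the network.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass
class DesignChange:       # hypothetical message sent by the game engine
    element: str
    parameter: str
    value: float


@dataclass
class SimulationResult:   # hypothetical message sent back by the physics engine
    change: DesignChange
    outputs: dict


def physics_worker(inbox: queue.Queue, outbox: queue.Queue, model: dict) -> None:
    """Apply each incoming change to the model, recalculate and send the results back."""
    while True:
        change = inbox.get()
        if change is None:  # sentinel: shut the worker down
            break
        model[(change.element, change.parameter)] = change.value
        # Placeholder calculation (assumed 100 m² facade, U = 0.3 wall / 1.1 window).
        # A real engine would rerun energy, lighting, airflow and so on here.
        window_area = model.get(("window", "area"), 0.0)
        h = 0.3 * (100.0 - window_area) + 1.1 * window_area
        outbox.put(SimulationResult(change, {"heat_loss_coefficient": h}))


def round_trip(change: DesignChange) -> SimulationResult:
    """Send one change to a fresh worker and wait (at most 5 s) for its result."""
    to_physics: queue.Queue = queue.Queue()
    to_game: queue.Queue = queue.Queue()
    worker = threading.Thread(target=physics_worker, args=(to_physics, to_game, {}))
    worker.start()
    to_physics.put(change)
    result = to_game.get(timeout=5)
    to_physics.put(None)
    worker.join()
    return result
```

Using a queue in each direction means the game engine never has to block on a calculation: it can keep rendering the scene and simply pick up results as they arrive.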

Game engine: Unreal 4

All the developments and personal breakthroughs mentioned earlier come together in Epic Games’ Unreal 4 game engine. In it, we can visualise, create worlds and create animations; basically, we create the entire user experience there. The engine comes with a lot of functionality out of the box that we use. But of course we want more. For our platform to work, we need pipelines that transfer data from outside the engine and visualise it in real time. That is not in the box, so we had to develop a backend database structure that makes this possible, using MS Azure and PlayFab, to name a few. And not only the database structure, but also all kinds of small utilities that make this streamed data transfer possible, such as deploying a Rhino/Grasshopper server and using Grasshopper compute as a REST API so we can automate all the tasks and simulations we want to use. I will not go into further detail; suffice it to say that here, too, we have taken a big step towards our prototype.

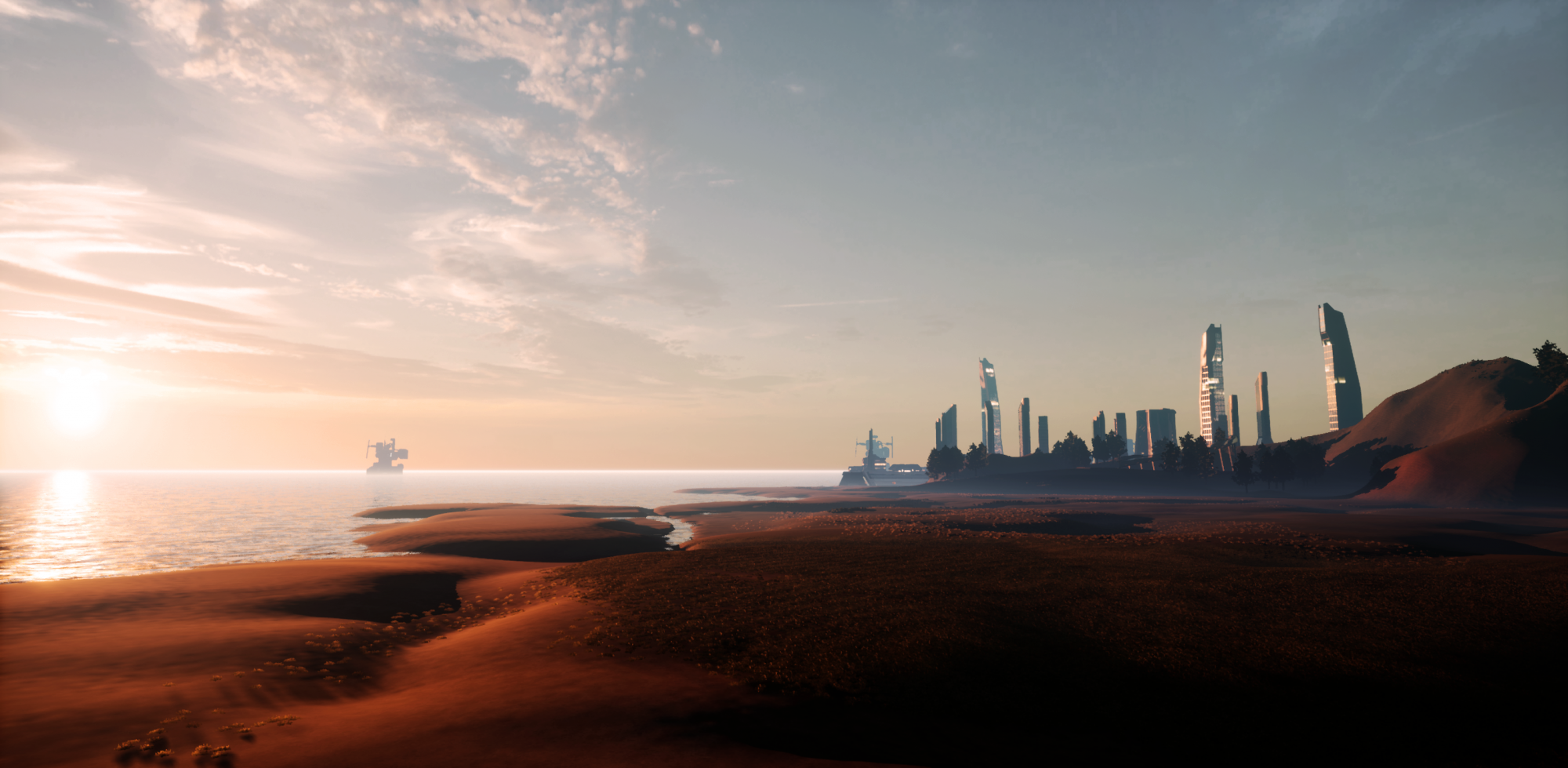

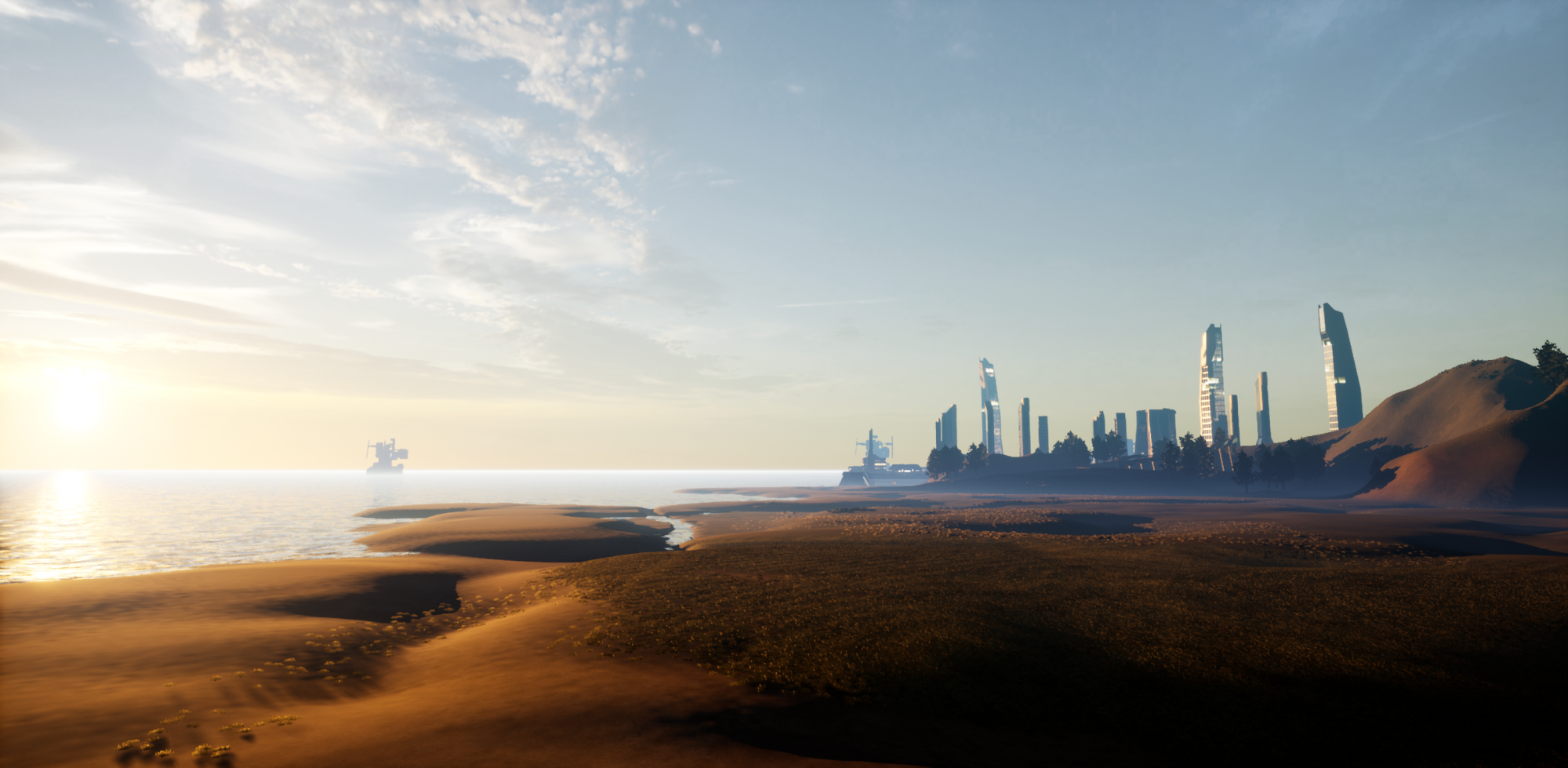

We now have a first draft of the user interface for entering the platform, creating your private user space and exploring part of the open world. We are currently building a database for interior design, so users will be able to furnish their space with furniture, paintings and such. To get the best visual quality, we still need to work on ray tracing and animations. But we are getting there. In the video, you get a first look at how to enter the platform, choose an avatar to represent yourself, type in your address and then walk around in your private space. Finally, you enter the open world, where you catch a glimpse of the city we are building.

When will it be ready?

Great question, and I wouldn’t be much of a CTO if I didn’t have a very specific idea of our schedule. But, like all studios, we need to be careful about what we promise. So... well... yeah... prototype ready in the first quarter of 2021. There, I said it, but don’t bite my head off if we need a bit more time :).

Well, that was a lot of text to go through. I hope you found it an interesting read, and I’d love to hear what you guys think.

Stay healthy and until next time!