What if you could use AI, simulation engines and GPUs to make decisions much like Tony Stark does with Jarvis? That would be awesome, wouldn’t it? When we talk about technology, we often refer to devices that make our lives easier or better, like smartphones or solar panels. But since the rise of the personal computer, we also use the word for software. Examples of such technologies are artificial intelligence (AI), simulation engines and game engines.

These three technologies in particular will change our world in the coming years. More and more, we will simulate the world around us so that we can make better decisions. And we will do this simply at home, with devices you already know, like smartphones, tablets, TVs, game consoles and so on. If we are able to create a virtual world that you can hardly distinguish from the real thing, it gives us numerous possibilities to explore the consequences of decisions before we apply them for real. This ability will be on our doorstep, available to literally everybody. We believe that if people really understand the consequences of their decisions, they will take the right course of action and, by doing so, create a better world for everybody.

Artificial Intelligence

For many, this is still a technology far removed from any practical use, although we all use it continuously. Think of Spotify, where AI is used to suggest music you might like. Come to think of it, the same goes for most media services, like YouTube and Netflix, and for sites that sell products, like Amazon. The general public pays less attention to progress in specific areas of AI such as Natural Language Processing (NLP), the field that focuses on communication between humans and computers in human language.

An excellent example of this is the significant achievement of the Google team (don’t forget to turn on your sound). Another is the case of NVIDIA, where the AI also communicates through facial expressions, which creates an even more natural feel when you talk to it. For obvious reasons, they called this AI “Jarvis”.

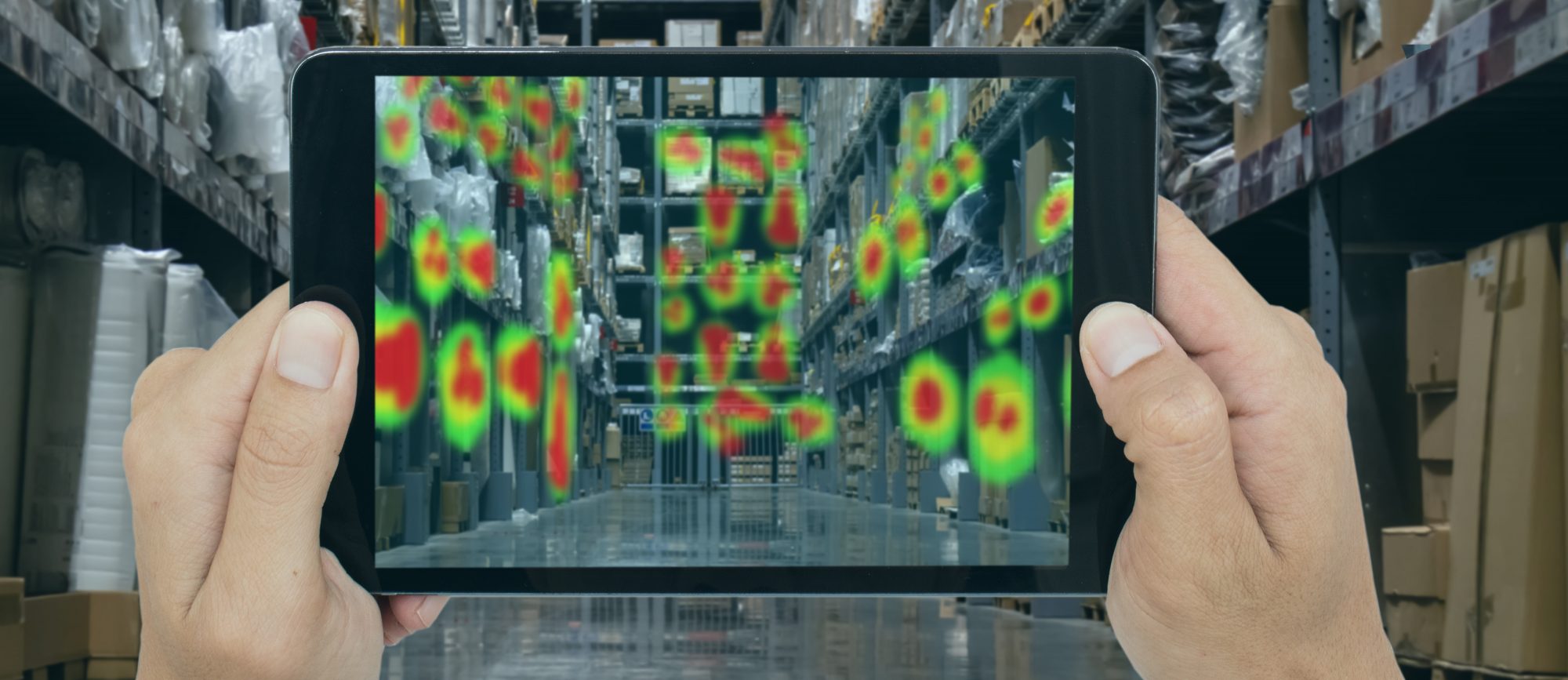

These are just examples of NLP models, but there is so much more, e.g. deep learning for image recognition or for analysing unlabelled data. The latter will be a great help in working with datasets like point clouds, which are massive sets of unlabelled data about real-life objects. Best of all, AI researchers make their latest achievements available for free or almost free, so everybody can use them or help develop them.

The future is better than the past; it is exciting, and it is one you want to be part of.

Simulation engines

A lot is happening in the simulation of real-world events. By events, we mean physical processes like heat generation or how solar panels convert sunlight into electricity, but also e.g. the behaviour of people and how they move inside buildings. This real-time simulation also permits the automation of design tasks like the development of layouts, MEP design, construction design and so forth.

The basis of many of these developments with simulation engines is open-source software. A simulation engine is mostly a math or physics calculation tool that can run algorithms in real time. Open source means that the source code of the software is published for everyone to use and modify; it does not necessarily mean the software is free of charge, by the way. This openness is a fantastic development, because nothing develops as fast as a project that lots of people contribute to. It is truly amazing and helps the automation of data analysis tremendously. Rhino with Grasshopper is a widely used example of software in this area; note that Rhino itself is a commercial product, while many Grasshopper plug-ins are shared openly by the community.

With this software, you can run automated data analysis tasks, even in real time. You could e.g. connect an engine to a public data source, like the weather data of KNMI in the Netherlands, or PDOK with all kinds of public data about the environment, such as bird counts or building information.

This opens the road for us to give any user the ability to use all this data, so everybody can take charge of major decisions like altering their home or finding a school for their kids. You could perform a digital “try-before-you-decide” in real time.
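To make the “try-before-you-decide” idea a bit more concrete, here is a minimal sketch in Python that compares two solar panel options for a home. It is only an illustration and not an actual implementation of any platform: the hourly irradiance readings are made up (in practice they could come from a public weather source), the `PanelOption` class and the function names are hypothetical, and the yield formula (irradiance × area × efficiency) is a deliberate simplification that ignores panel orientation, temperature and system losses.

```python
from dataclasses import dataclass

# Made-up hourly solar irradiance readings for one day, in W/m^2.
# Assumption: one reading per hour, 24 readings in total.
SAMPLE_IRRADIANCE_W_M2 = [
    0, 0, 0, 0, 0, 50, 150, 300, 450, 600, 700, 750,
    750, 700, 600, 450, 300, 150, 50, 0, 0, 0, 0, 0,
]


@dataclass
class PanelOption:
    """A hypothetical design option the user wants to try out."""
    name: str
    area_m2: float      # total panel area
    efficiency: float   # assumed fraction of sunlight turned into electricity


def daily_yield_kwh(option: PanelOption, irradiance_w_m2: list) -> float:
    """Estimate one day's electricity yield in kWh (simplified model)."""
    # Each reading covers one hour, so W/m^2 * m^2 * efficiency = Wh for that hour.
    watt_hours = sum(r * option.area_m2 * option.efficiency for r in irradiance_w_m2)
    return watt_hours / 1000.0


def compare(options: list, irradiance_w_m2: list) -> dict:
    """Return the estimated daily yield per option, rounded to two decimals."""
    return {o.name: round(daily_yield_kwh(o, irradiance_w_m2), 2) for o in options}


if __name__ == "__main__":
    options = [
        PanelOption("small roof", area_m2=10.0, efficiency=0.2),
        PanelOption("large roof", area_m2=20.0, efficiency=0.2),
    ]
    for name, kwh in compare(options, SAMPLE_IRRADIANCE_W_M2).items():
        print(f"{name}: about {kwh} kWh per day")
```

The point of the sketch is the workflow rather than the numbers: feed in data, run the same calculation for each option, and put the outcomes side by side before making the decision for real.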

In the future, we will have video games so realistic you won’t be able to tell the difference between games and reality.

Elon Musk

Game engines

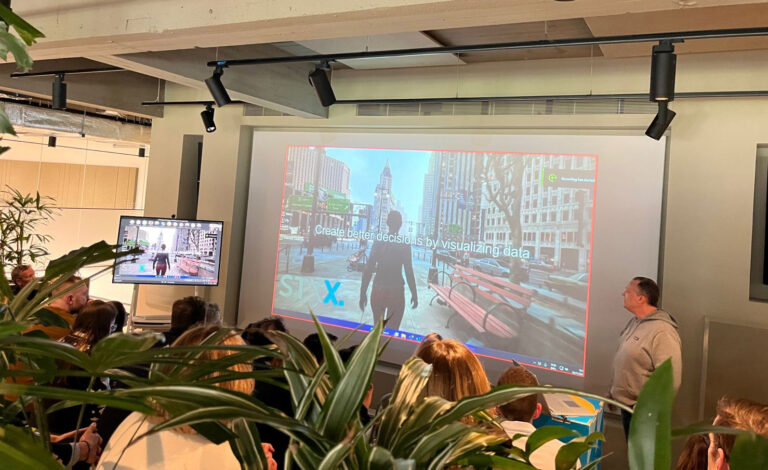

OK, so now we have talked about the automation of tasks, the simulation of real-life events and AI. But how can everybody make use of these new technologies? Well, the next thing we need to do is visualise them in such a way that everybody can understand what is going on. This is the area of game development. For decades, this field has been busy visualising virtual environments for large groups of people at the same time. Such games are called massively multiplayer online (MMO) games. Fortnite from Epic Games, Inc. is a well-known example of a game played online by very large numbers of people.

The software they use for this is a game engine. And as you may have guessed, the source code of this engine is available to its users as well, so again, everybody can use it to create tools. And what can such a game engine do for me, you ask? Well, check the video where they show to what extent they can visualise with photorealistic quality; this really is a big achievement. If we couple our analysed real-time data to a game engine, we can visualise our simulations in such a way that everybody can understand them. Numbers are hard to grasp, but a visual experience is easy for most people.

At the moment we use Unreal Engine 4 in our development, but we can’t wait until version 5 becomes available. And the best thing is that, with something called Pixel Streaming, we can stream the content to any device: phone, tablet, TV, desktop or VR.

It’s not only the software that is making huge steps, but also the hardware. About two years ago, NVIDIA for example launched a graphics card with real-time ray tracing. This means not only the capability to generate images of up to 8K quality, but also that objects in the virtual world react to light as they do in real life, giving people a very realistic experience inside such an environment. For us, these astonishing achievements were the sign to start on this path and get going with building the platform.

Is technology going to save our planet?

Yes! We believe so. We know that we cannot engineer nature. There are enough examples of human interference with nature where we made mistakes: not intentional, but harmful nonetheless, like the salmon farms in Norway. We tried to keep up with the demand for salmon by growing them like weeds, but as it turns out, that does more harm than good.

But we have had successes with engineering too: think of solar panels, wind turbines, electric cars and hydrogen fuel cells, although the relevance of the latter has become debatable with the upcoming battery improvements by Tesla. These technologies will help to reduce the CO2 output of us all and make a successful transition to all-electric possible. But we need to speed this transition up, and fast. Nature is telling us this. The technology described here will help. If you are aware of which decisions you can make when altering your house or buying a car, and of the effect they have on saving our planet, we believe that you will make the right decision. It’s only a matter of presenting and experiencing the data, so you understand what is going on. When you “try-before-you-decide” and experience the effect it has on the world, how could you not make the right decision?

Here we show you some rudimentary examples of the tool we are creating. For obvious reasons, our platform, and the AI it will hold for you, is called Styx.